Data Overview

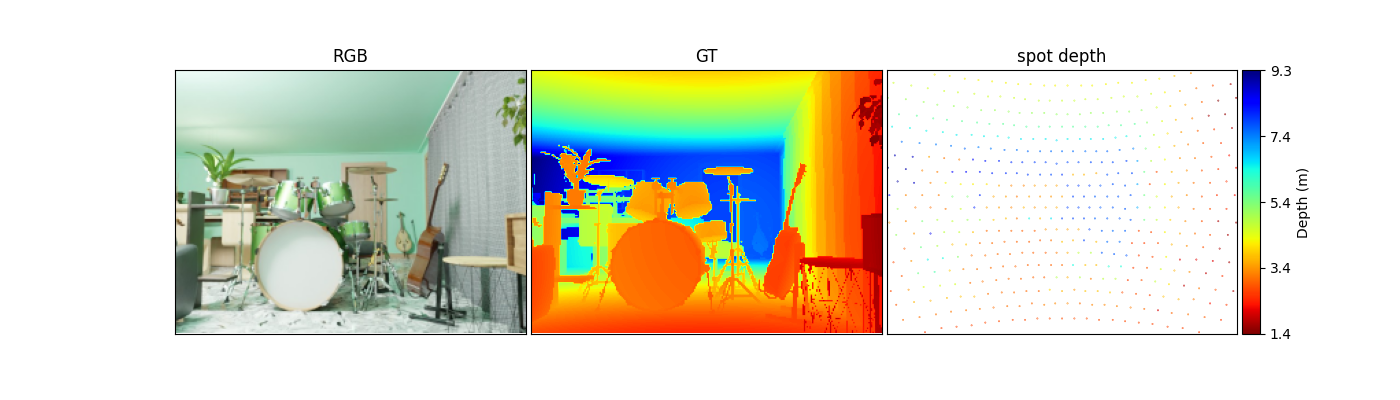

The training data contains 7 image sequences of aligned RGB and ground-truth dense depth from 7 indoor scenes. For each scene, the RGB and ground-truth depth images are rendered along a smooth trajectory in a 3D virtual environment that we created. RGB and dense depth images in the training set have a resolution of 640x480 pixels.

The testing data contains

- A synthetic image sequence (500 pairs of RGB and depth in total) rendered from an indoor virtual environment that is different from the training data.

- 48 image sequences of both static and dynamic scenes (1200 pairs of RGB and depth in total) collected with an iPhone 12 Pro.

- 24 image sequences of static scenes (600 pairs of RGB and depth in total) collected from a modified phone.

RGB and dense depth images in the testing set have a resolution of 256x192 pixels. For the testing set, the RGB images and spot depth data are provided, while the ground-truth (GT) depth is not available to participants. Depth values in both the training and testing sets are in meters.
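Because the test depth maps are 256x192 pixels and expressed in meters, a quick check of each prediction before saving can catch shape, unit, or dtype slips. The Python sketch below is a hypothetical helper (not part of the official code); it uses NumPy and assumes that "256x192" means width x height, matching the 640x480 convention used for the training data, and that valid depth is finite and non-negative.

```python
import numpy as np

# ASSUMPTION: "256x192" means width x height (same convention as 640x480),
# so a test depth map has 192 rows and 256 columns.
TEST_HEIGHT, TEST_WIDTH = 192, 256


def check_prediction(depth):
    """Validate one predicted test depth map and return it as float32 meters.

    Hypothetical helper for illustration; it is not part of the official code.
    """
    depth = np.asarray(depth)
    if depth.ndim == 3 and depth.shape[-1] == 1:
        depth = depth[..., 0]  # drop a trailing singleton channel axis
    if depth.shape != (TEST_HEIGHT, TEST_WIDTH):
        raise ValueError(
            f"expected shape {(TEST_HEIGHT, TEST_WIDTH)}, got {depth.shape}")
    if not np.all(np.isfinite(depth)):
        raise ValueError("depth contains NaN or Inf values")
    # ASSUMPTION: metric depth should never be negative.
    if np.any(depth < 0):
        raise ValueError("depth must be non-negative (meters)")
    # float32 matches the precision required for the submitted .exr files.
    return depth.astype(np.float32, copy=False)


pred = np.full((192, 256), 1.5)  # float64 by default
checked = check_prediction(pred)
print(checked.dtype, checked.shape)  # float32 (192, 256)
```

A transposed (256, 192) array, NaN values, or negative depths raise a ValueError instead of silently producing an invalid result file.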

Data Processing

Participants can load the data using the "data.list" file in both the training and testing data folders. For loading and visualizing the data, please refer to the source code.
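The exact layout of "data.list" is defined by the organizers' source code, which should be treated as the reference. Purely as an illustration, the Python sketch below assumes a hypothetical layout in which every non-empty line lists the files of one sample as whitespace-separated paths relative to the folder that holds "data.list"; the function name read_data_list is also hypothetical.

```python
from pathlib import Path


def read_data_list(list_path):
    """Parse a data.list file into a list of samples (lists of Paths).

    ASSUMPTION: each non-empty line describes one sample as whitespace-
    separated file paths relative to the folder containing data.list.
    The real column layout is defined by the organizers' source code.
    """
    list_path = Path(list_path)
    root = list_path.parent
    samples = []
    with list_path.open("r", encoding="utf-8") as f:
        for line in f:
            fields = line.split()
            if not fields:
                continue  # skip blank lines
            # Joining with root leaves absolute paths unchanged.
            samples.append([root / field for field in fields])
    return samples
```

Each returned sample is a list of pathlib.Path objects that can then be handed to whichever image or EXR reader the loading code uses.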

Data Download

- RGB+ToF Depth Completion Training Images [Google Drive, ~18GB]

- RGB+ToF Depth Completion Validation Images [Google Drive]

- RGB+ToF Depth Completion Testing Images (input) (will be available in the testing phase)

Submission Requirements

To submit your results, follow these steps:

- Process the input images using the "data.list" file provided with each dataset. The predicted depth maps should be saved in '.exr' format with float32 precision.

A "data.list" file is also required to record the file names of these results. Please refer to the test function of [our baseline code] for saving results and generating the "data.list".

The submitted results should follow the format below. Note that the directory names and the "data.list" file names must be exactly the same as shown.

- Results
  - iPhone_static
    - data.list
    - result_1.exr
    - result_2.exr
    - ......
  - iPhone_dynamic
    - data.list
    - result_1.exr
    - result_2.exr
    - ......
  - modified_phone_static
    - data.list
    - result_1.exr
    - result_2.exr
    - ......
  - synthetic
    - data.list
    - result_1.exr
    - result_2.exr
    - ......

- A readme.txt file should contain the following lines, filled in with the runtime per image (in seconds) of the solution, the number of model parameters, and 1 or 0 depending on whether extra data was used for training the models, for example:

Runtime per image [s] : 0.025

Parameters : 1050290

Extra Data [1] / No Extra Data [0] : 1

Other description : GPU: Titan Xp; CPU: Intel Core i7-5500U etc. Extra data: DF2K

The last part of the file can contain any description you like of the code that produced the results (dependencies, links, scripts, etc.).

The provided information is very important both during the validation period, when teams can compare their results and solutions, and for establishing the final ranking of the teams and their methods.

- Create a ZIP archive containing all the output results named as above and the readme.txt file. Note that the archive should not include an enclosing folder: all the result folders and files should be at the root of the archive.
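The following Python sketch shows one way to fill in a single subset folder and the readme.txt. The helpers seconds_per_image, write_subset, and write_readme are hypothetical; the EXR writer is passed in as a callable rather than tied to a particular library, and the sketch assumes that predictions are produced in the same order as the input "data.list" and that the output "data.list" lists one result file name per line. The baseline code's test function remains the reference for the exact format.

```python
import time
from pathlib import Path

import numpy as np

# Subset folder names taken from the required submission layout.
SUBSETS = ("iPhone_static", "iPhone_dynamic", "modified_phone_static", "synthetic")


def seconds_per_image(predict, inputs):
    """Average wall-clock seconds per call of predict() over inputs."""
    inputs = list(inputs)
    if not inputs:
        raise ValueError("need at least one input to time")
    start = time.perf_counter()
    for x in inputs:
        predict(x)
    return (time.perf_counter() - start) / len(inputs)


def write_subset(out_dir, subset, predictions, save_exr):
    """Save one subset's predictions as result_<i>.exr and write its data.list.

    save_exr(path, depth) is a caller-supplied EXR writer (hypothetical hook).
    ASSUMPTIONS: predictions arrive in the same order as the input data.list,
    and the output data.list holds one result file name per line.
    """
    if subset not in SUBSETS:
        raise ValueError(f"unknown subset folder: {subset}")
    subset_dir = Path(out_dir) / subset
    subset_dir.mkdir(parents=True, exist_ok=True)
    names = []
    for i, depth in enumerate(predictions, start=1):
        name = f"result_{i}.exr"
        # Cast to float32, the precision required for the submitted depth.
        save_exr(subset_dir / name, np.asarray(depth, dtype=np.float32))
        names.append(name)
    (subset_dir / "data.list").write_text("\n".join(names) + "\n", encoding="utf-8")
    return names


def write_readme(out_dir, runtime_s, n_params, extra_data, description):
    """Write readme.txt with the four lines required by the organizers."""
    lines = [
        f"Runtime per image [s] : {runtime_s}",
        f"Parameters : {n_params}",
        f"Extra Data [1] / No Extra Data [0] : {1 if extra_data else 0}",
        f"Other description : {description}",
    ]
    path = Path(out_dir) / "readme.txt"
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path
```

With OpenCV, save_exr could be something like `lambda p, d: cv2.imwrite(str(p), d)`; recent OpenCV builds may only write EXR files when the OPENCV_IO_ENABLE_OPENEXR environment variable is set before cv2 is imported. When timing a GPU model, synchronize the device before reading the clock (for PyTorch, torch.cuda.synchronize()), since asynchronous kernels otherwise make the runtime look shorter than it is. Placing readme.txt next to the subset folders is itself an assumption about where the organizers expect it.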

We recommend using our [scoring program] to check that your submission is correct.
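As a lightweight local check before running the scoring program, the Python sketch below verifies that each subset folder has a "data.list" and that readme.txt is present, then builds the ZIP with the standard zipfile module. It assumes, as one interpretation of the archive note in the submission steps, that the subset folders and readme.txt should sit directly at the archive root with no enclosing folder; build_submission_zip is a hypothetical name, and the check does not replace the official scoring program.

```python
import zipfile
from pathlib import Path

# Subset folders taken from the required submission layout.
REQUIRED_DIRS = ("iPhone_static", "iPhone_dynamic", "modified_phone_static", "synthetic")


def build_submission_zip(results_dir, zip_path):
    """Check the expected layout, then zip the contents of results_dir.

    ASSUMPTION: the archive root should directly contain the subset folders
    and readme.txt, with no enclosing top-level folder. Returns the archive's
    member names so they can be inspected.
    """
    results_dir = Path(results_dir)
    zip_path = Path(zip_path).resolve()
    missing = [name for name in REQUIRED_DIRS
               if not (results_dir / name / "data.list").is_file()]
    if missing:
        raise FileNotFoundError(f"data.list missing in: {missing}")
    if not (results_dir / "readme.txt").is_file():
        raise FileNotFoundError("readme.txt missing")
    # Collect files first so the archive being written is never added to itself.
    files = sorted(p for p in results_dir.rglob("*")
                   if p.is_file() and p.resolve() != zip_path)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in files:
            # Store paths relative to results_dir, so no wrapper folder is added.
            zf.write(path, arcname=path.relative_to(results_dir).as_posix())
    with zipfile.ZipFile(zip_path) as zf:
        return zf.namelist()
```

For a complete results folder, the returned names would include entries such as "synthetic/data.list" and "readme.txt", which makes it easy to confirm that nothing is nested under an extra top-level directory.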

Final Submission

Participants will need to submit results on the CodaLab server. The submission format is the same as in the validation phase; details of the submission format can be found in the evaluation section of the competition.

In addition to submitting on the CodaLab server, all participants should email the challenge organizers at mipi.challenge@gmail.com to complete the final submission.

The subject of the email should be: RGB+ToF Depth Completion MIPI-Challenge - TEAM_NAME

The body of the email shall include the following information:

a) the challenge name

b) team name

c) team leader's name and email address

d) rest of the team members

e) team name and user names on the RGB+ToF Depth Completion CodaLab competitions

f) executable/source code attached or download links

g) a factsheet describing the solution(s); each team in the final testing phase should write one up using the [factsheet template]

h) download link to the results of all of the test frames

Note that the executable/source code should include pre-trained models or the necessary parameters so that we can run it and reproduce the results. There should be a README or description explaining how to run the executable/code. The factsheet must be a compiled PDF file. Please provide a detailed explanation.